Enabling new capabilities and improving user experience through voice

Alexa Voice Service (AVS) is a powerful way to add intelligent voice control to any connected product that has a microphone and a speaker. Conversational assistant technology brings a rich set of capabilities to products through an intuitive, “human” interface. AVS is pioneering this trend not just in the connected home but now also in industrial, automotive, and many other markets.

Why Synapse?

Synapse has a track record of working with clients to translate their business strategy into technology solutions and working systems, and is a qualified Alexa Consulting and Professional Services provider.

With over 50 years of hardware product development expertise, we’re able to tackle some of the toughest problems in acoustics, signal processing, and power management for small form factors and noisy environments, while meeting aggressive cost and schedule targets. We know that contextual awareness is an opportunity to transform the relationship between people and voice interfaces, which is why we’re excited to integrate additional sensors into these systems, opening the door to even more intuitive user experiences.


Health and wellness

Supporting effortless interactions and monitoring

Smart home

Enabling intuitive UI through contextual awareness

Mobile use cases

Optimizing for power, leveraging AI at the edge

Commercial and industrial

Handling unique language and environmental constraints

Enabling Voice in Noisy Environments

Synapse has developed systems that enable voice interfaces in environments too noisy for most existing voice-enabled devices.

We use acoustic design, audio beamforming, echo cancellation, and noise floor reduction to maintain a reliable user experience. We work closely with leading technology suppliers in sensing, signal processing, and acoustics, and we also develop custom or semi-custom audio DSP solutions.

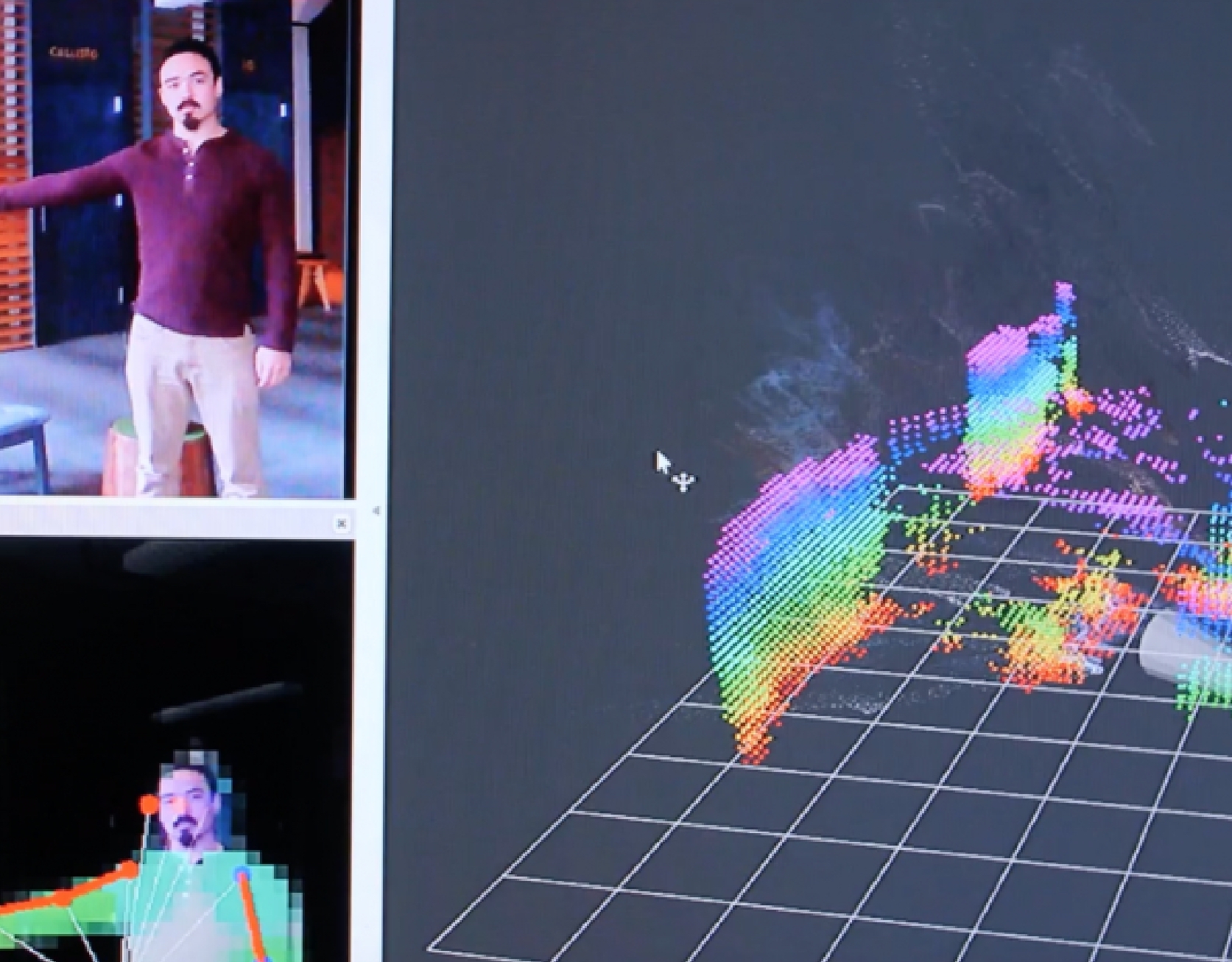

Bringing Additional Context to the “Conversation”

Our teams have created systems that detect a user’s gestures, gaze, and position relative to surrounding objects, enabling more intuitive interactions.

We use these innovations to augment voice interfaces with context from computer vision, AI, and other sensors, so that a user can, for example, say “turn on that light” while pointing at it.

“Synapse is an ideal Alexa Voice Service Consulting & Professional Services Provider because of their breadth of services and breakthrough innovation to transform their clients’ business and customer experience in digital and product design.”

Adam Berns, Head of Amazon Alexa Business Development