It’s Not Me, It’s Them

My Lyft picked me up at 5 am on an unseasonably warm Tuesday morning in March to drag me to SeaTac airport. I had an early morning flight to Detroit, where I was attending the ADAS Sensors 2019 conference in Dearborn, right next to Ford’s global headquarters and still very much the heart of the North American automotive industry. My driver, Ulises, asked where I was headed, and I reluctantly indulged the conversation starter, explaining where I was going and why. This quickly turned from a conversation about the current state of Advanced Driver Assistance Systems (ADAS) into a broader conversation about autonomous vehicles (AVs). Ulises wasted no time arriving at the fairly common conclusion that he would never get in a car without a driver. I asked why, and he listed a number of reasons, ranging from the lack of reliability of the systems (based on anecdotal and somewhat limited data) to the simple fact that he liked being in control. But he also expressed his frustration that, in his humble opinion, no one else knows how to drive properly. When I suggested AVs could help those drivers get around more smoothly and safely, he agreed, but insisted the same would not be true for himself. I enjoy these conversations because there is always such a wide range of comfort (or discomfort) with the prospect of a car taking over more and more of the driving operation. And this contradiction of wanting to remain in control while simultaneously not trusting other people, or entities, to do the same seems to be ubiquitous. It’s an entertaining yet baffling paradox, even at 5 o’clock in the morning.

“I’m a Really Good Driver” and Other Common Myths

The discussion around AVs highlights several fascinating human reactions, the most prevalent of which is the idea of dread risk. As the name suggests, dread risk is a risk to which people have a particularly strong aversion. Very often, this aversion is rooted in a fundamental lack of control. A common example of dread risk is the fear of a plane crash. Although the vast majority of airline passengers are not trained pilots, there can be a particularly acute sense of taking a serious risk because you are fundamentally not in control of a situation that can cause you harm. We are orders of magnitude less likely to accept the risk of something that we do not control. Think of the scenario where you feel discomfort because your rideshare driver begins to drive in a way that you deem aggressive or reckless; yet when you drive in the exact same manner, you perceive significantly less risk because you are in control. On a less trivial note, this may be why almost 40,000 motor vehicle deaths each year are met with little to no change in safety legislation, yet the single death caused by a complicated failure of Uber’s self-driving technology caused widespread public discomfort and a stark reevaluation by automakers and government regulators of the technologies, requirements, and permissions involved in allowing these vehicles to continue testing on public roads. Put simply, we are generally uncomfortable handing over control of a basic adult skill to a suite of sensors and processors, and tend to see even the slightest failure of those systems as a reinforcement of a deeply held bias: they are not yet fully capable of mimicking human drivers (on their best days) and are therefore not trustworthy.

While the regulation and legislation debate over “how safe is safe enough” continues, AVs continue to dominate the discussion when assessing the intersection of mobility and technology in the future. Massive amounts of time and money are being dedicated to bringing this technology into the world, from Silicon Valley-based tech start-ups to 100+ year-old automotive juggernauts in the Motor City, and everything in between. And while self-driving cars are exciting and, undoubtedly, the transportation of the future, the initial meaningful deployment of sensors in current cars falls squarely under the broad category of ADAS.

The term ADAS encompasses a number of systems already on the road: lane guidance and lane departure detection, adaptive cruise control, and forward collision and blind spot warnings. Essentially, these systems are the predecessors to self-driving systems, as they see, assess, and react to their surroundings in a similar fashion. Drivers have accepted them relatively easily because they are either passive or voluntary; the driver can choose whether to engage them, or even ignore them, as in the case of blind spot warnings. There is compelling research suggesting that if these systems were installed on all vehicles, they could reduce traffic accidents by 40% and fatalities by almost 30%. Furthermore, there are now signs that drivers are becoming too dependent on ADAS or overestimating its functionality. While this is potentially dangerous, it indicates an interesting shift: drivers are starting to trust these systems more and more.

The Growth Path to Autonomous Vehicles Will Not Be Linear

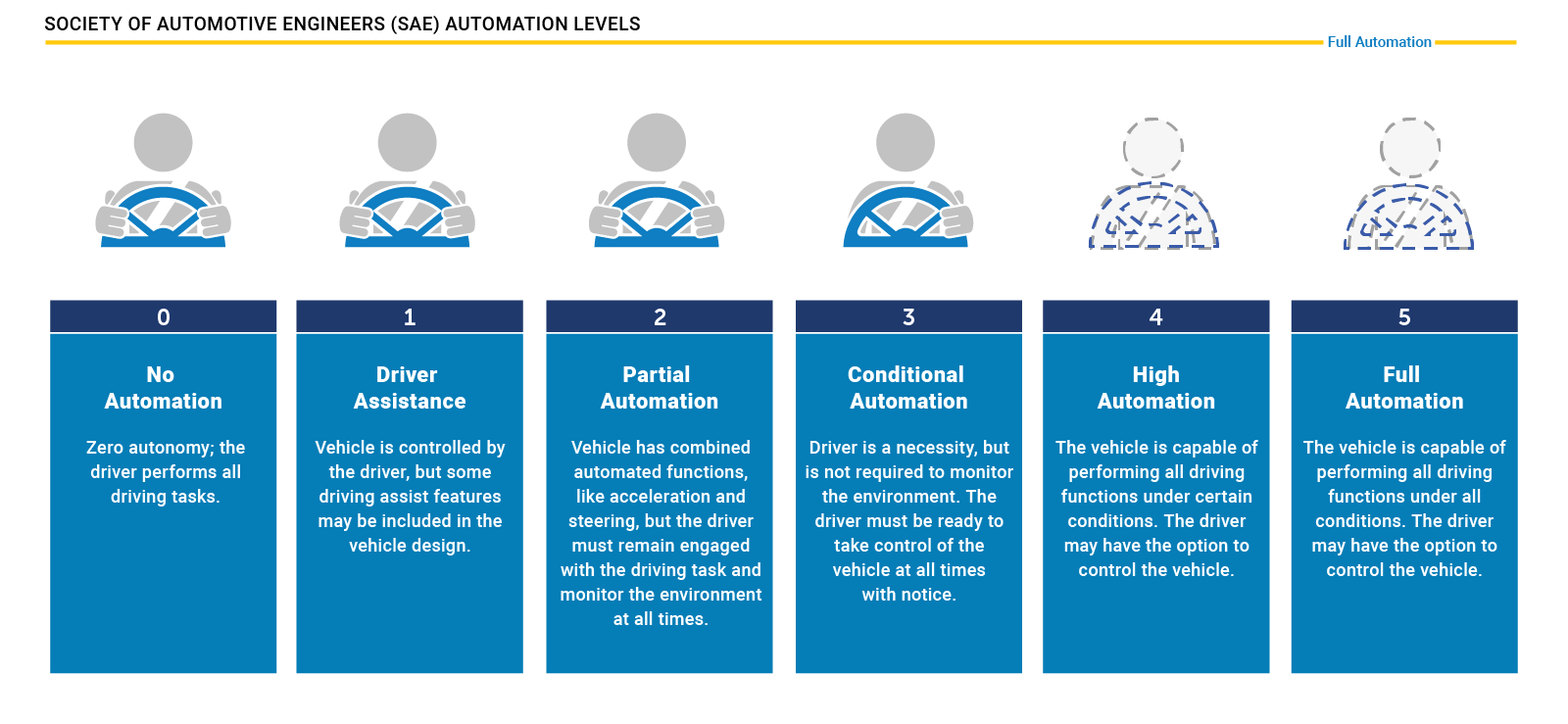

Many automakers and AV technology companies have predicted that self-driving cars will be on the road sometime in the early 2020s, starting with “robotaxis” (autonomous transportation sold as a service) in specific geofenced urban areas. One of the biggest concerns, however, arises at Level 3 and centers on the driver handoff procedure. By definition, a Level 3 car drives itself in most conditions until it encounters a situation that it cannot resolve. It then requires the driver to take over all operations of the vehicle. This is problematic because 1) the car is in a confusing situation, which is why it initiates a handover, and 2) the driver is likely at their most distracted, as they haven’t been required to pay attention to the conditions that created the confusing situation in question. This is leading many OEMs to implement what is being referred to as “Level 2+” (between Levels 2 and 3), or the most advanced use of ADAS. While this will reduce the liability of the automakers and sensor integrators, it may have the added benefit of slowly accustoming drivers to more and more functions being taken over by the vehicle without losing all control.

Imagine you purchase a new car in a few years. Unlike the expensive technology upgrade packages of today, it has a standard full complement of sensor technology: radar, ultrasonic, RGB camera, thermal imaging, and high-accuracy GPS/GNSS linked to HD map sources. This allows the vehicle to know where it is at all times, monitor its surroundings, and maintain full functionality in adverse weather and at night. Your car has lane monitoring, blind spot detection, automated lane changing, adaptive cruise control, and automatic emergency braking. All of these functions now also work at slow speeds, e.g. stop-and-go rush hour traffic, not just highway cruising. Your car can parallel park itself or pull in and out of tight spots on its own, features once reserved for high-priced luxury vehicles. More importantly, it can now drop you off in front of your favorite store at the mall, and then move slowly about the geofenced parking lot to find a parking spot – a literal valet. You can see how you could become so accustomed to each of these functions, you begin to rely on them exclusively for the majority of your driving. If you visualize them as building blocks, you could string a dozen of them together and almost have what resembles a self-driving car. As you drive (or don’t drive) the car more and more, you build more and more trust in the systems. And, just as importantly, the car manufacturers continually collect massive amounts of situational driving data from your real-life scenarios, constantly training and improving the artificial intelligence the systems rely on, increasing their reliability and versatility. By the time you buy your next car in 2030, you have most likely become so accustomed to the convenience and reliability that it isn’t nearly the mental jump it once was to get into a Level 4 self-driving car, or Level 5 robotaxi for that matter. 
You have, slowly but surely, boiled yourself into acceptance as the AV water has heated up, probably without realizing it.

This is why the ADAS industry is rapidly taking center stage as the focal point for the development of AV technology. The normalization of ADAS and our increasing use of and dependence on those systems are the keys to overcoming the apprehension we have toward AVs. This will give the auto industry and other technology frontrunners time to improve sensor performance and reduce costs. They will be able to take huge strides towards increasing computing power and decreasing energy requirements. Perhaps most importantly, as outlined in our white paper “AI in the driving seat”, they will collect the driving data needed to further develop and train the revolutionary algorithms that will lead to better decision making with more robust and reliable artificial intelligence, doing so while the stakes are low (and palatable) enough for universal adoption. And at the end of that road to autonomous vehicle adoption, maybe, just maybe, my Lyft driver will finally be willing to hop in an AV on his way to the airport.